At the time of this writing, GCP does not have a generally available internal (non-public-facing) Layer 7 load balancer. While this is sure to change, this article outlines a design pattern that has proven to provide scalable and extensible application load balancing for multiple applications running in Kubernetes pods on GKE.

When you create a Service of type LoadBalancer in GKE, Kubernetes calls the cloud provider (GCP in this case) on your behalf to create a Google Cloud load balancer. Although this load balancer can be specified as INTERNAL, there are two issues:

Issue #1:

The GCP load balancer created for you is a Layer 4 TCP load balancer.

Issue #2:

The default behaviour is for Google to enumerate all of the node pools in your GKE cluster and “automagically” create a matching GCE instance group for each node pool in each zone where its instances are deployed, then use those instance groups as the load balancer's backends. This means the entire surface area of your cluster is exposed to the network outside it, which may not be desirable for internal applications on a multi-tenant cluster.
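For reference, the default approach that runs into both issues is a Service like the one sketched below. It is written in Python so the manifest can be emitted as JSON, which kubectl accepts just as it accepts YAML. The `networking.gke.io/load-balancer-type` annotation is how GKE is asked for an internal load balancer (older clusters used `cloud.google.com/load-balancer-type`); the service name, namespace, label, and ports are hypothetical.

```python
import json


def internal_lb_service(name: str, namespace: str, app_label: str) -> dict:
    """Service of type LoadBalancer that asks GKE for an internal load balancer.

    `name`, `namespace`, `app_label`, and the ports are hypothetical placeholders.
    """
    return {
        "apiVersion": "v1",
        "kind": "Service",
        "metadata": {
            "name": name,
            "namespace": namespace,
            # Request an internal (VPC-only) load balancer instead of a public one.
            "annotations": {"networking.gke.io/load-balancer-type": "Internal"},
        },
        "spec": {
            "type": "LoadBalancer",
            "selector": {"app": app_label},
            # Plain TCP forwarding only: no host/path routing and no TLS offload.
            "ports": [{"name": "https", "port": 443, "targetPort": 8443, "protocol": "TCP"}],
        },
    }


if __name__ == "__main__":
    # Usage: python internal_svc.py | kubectl apply -f -
    print(json.dumps(internal_lb_service("orders", "shop", "orders"), indent=2))
```

Applying a Service like this is what causes GKE to build the Layer 4 load balancer with backends spanning every node pool, as described in the two issues above.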

The Solution:

Using Istio on GKE with the Istio Ingress Gateway and a load balancer created outside Kubernetes, it is possible to get scalable HTTP load balancing with all the usual application load balancer (ALB) goodness: stickiness, path-based routing, host-based routing, health checks, TLS offload, and so on.

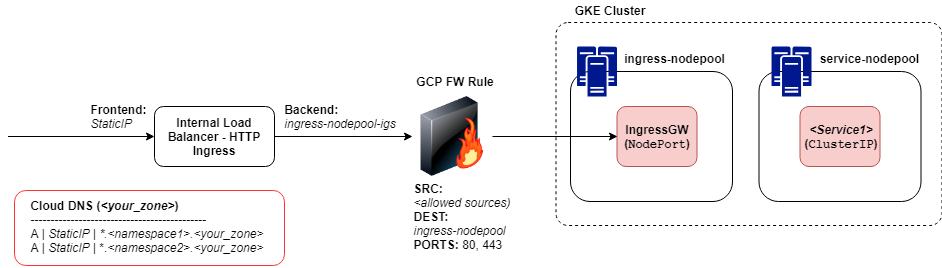

An abstract depiction of this architecture is shown here:

This can be deployed with a combination of Terraform and kubectl. The steps to deploy at a high level are:

1. Create a GKE cluster with at least two node pools: ingress-nodepool and service-nodepool. Ideally, make these node pools multi-zonal for availability. You could also create additional node pools, for example one for your Egress Gateway or an operations-nodepool to host Istio.

2. Deploy Istio.

3. Deploy the Istio Ingress Gateway service on the ingress-nodepool using Service type NodePort.

4. Create an associated Istio Gateway that uses your server certificates and private keys for TLS offload.

5. Deploy your application (its pods and Service) on the service-nodepool.

6. Reserve an unallocated static internal IP address from the node network range.

7. Create an internal TCP load balancer:

   1. Specify the frontend as the IP address reserved in step 6.

   2. Specify the backend as the managed instance groups created for the ingress-nodepool when the node pool was created (ingress-nodepool-ig-a, ingress-nodepool-ig-b, ingress-nodepool-ig-c).

   3. Specify ports 80 and 443.

8. Create a GCP firewall rule to allow traffic from authorized sources (network tags or CIDR ranges) to targets with the ingress-nodepool network tag.

9. Create a Cloud DNS A record in your managed zone for *.namespace.zone pointing to the IP address assigned to the load balancer frontend in step 7.1.

10. Add a GCP firewall rule that lets Google Cloud health checks (source ranges 35.191.0.0/16 and 130.211.0.0/22) reach, at a minimum, nodes with the ingress-nodepool network tag. There is no harm in allowing them to reach all node pools.
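Pinning the ingress gateway to its own node pool and exposing it as a NodePort, as the steps above describe, can be expressed as an IstioOperator overlay. This is a minimal sketch assuming an Istio release installed with `istioctl` and the IstioOperator API, and assuming a partial `k8s.service` block is merged with the chart's default ports rather than replacing them. It relies on the `cloud.google.com/gke-nodepool` label that GKE puts on every node; the pool name is taken from the steps.

```python
import json


def ingress_gateway_overlay(pool: str = "ingress-nodepool") -> dict:
    """IstioOperator overlay: run the ingress gateway only on `pool` and expose it
    as a NodePort Service, so GKE does not provision a load balancer for it."""
    return {
        "apiVersion": "install.istio.io/v1alpha1",
        "kind": "IstioOperator",
        "spec": {
            "components": {
                "ingressGateways": [{
                    "name": "istio-ingressgateway",
                    "enabled": True,
                    "k8s": {
                        # GKE labels each node with the name of its node pool.
                        "nodeSelector": {"cloud.google.com/gke-nodepool": pool},
                        # NodePort instead of LoadBalancer (assumed to merge with chart defaults).
                        "service": {"type": "NodePort"},
                    },
                }],
            },
        },
    }


if __name__ == "__main__":
    # JSON is valid YAML, so the output can be saved and passed to `istioctl install -f`.
    print(json.dumps(ingress_gateway_overlay(), indent=2))
```

Keeping the gateway on a dedicated pool is what lets the load balancer backends and firewall rules target only the ingress-nodepool instance groups and network tag.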
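The TLS offload and host-based routing parts of these steps map onto an Istio Gateway plus one VirtualService per application. The sketch below assumes Istio 1.5 or later (for the `networking.istio.io/v1beta1` API) and uses hypothetical names throughout: namespace `shop`, DNS zone `corp.example.com`, TLS secret `shop-tls`, Gateway `internal-gateway`, and an `orders` Service on port 8080.

```python
import json

GATEWAY_NAME = "internal-gateway"  # hypothetical Gateway name


def istio_gateway(namespace: str, zone: str, tls_secret: str) -> dict:
    """Gateway that accepts every host under *.<namespace>.<zone> and terminates TLS."""
    hosts = [f"*.{namespace}.{zone}"]
    return {
        "apiVersion": "networking.istio.io/v1beta1",
        "kind": "Gateway",
        "metadata": {"name": GATEWAY_NAME, "namespace": namespace},
        "spec": {
            # Bind to the default ingress gateway pods (running on ingress-nodepool).
            "selector": {"istio": "ingressgateway"},
            "servers": [
                # Plain HTTP on port 80 is redirected to HTTPS.
                {"port": {"number": 80, "name": "http", "protocol": "HTTP"},
                 "hosts": hosts, "tls": {"httpsRedirect": True}},
                # TLS offload on 443. The secret holds the server certificate and key
                # and must live in the same namespace as the ingress gateway pods.
                {"port": {"number": 443, "name": "https", "protocol": "HTTPS"},
                 "hosts": hosts,
                 "tls": {"mode": "SIMPLE", "credentialName": tls_secret}},
            ],
        },
    }


def virtual_service(service: str, namespace: str, zone: str, port: int) -> dict:
    """Route <service>.<namespace>.<zone> through the Gateway to the in-cluster Service."""
    return {
        "apiVersion": "networking.istio.io/v1beta1",
        "kind": "VirtualService",
        "metadata": {"name": service, "namespace": namespace},
        "spec": {
            "hosts": [f"{service}.{namespace}.{zone}"],
            "gateways": [f"{namespace}/{GATEWAY_NAME}"],
            "http": [{
                "match": [{"uri": {"prefix": "/"}}],
                "route": [{"destination": {
                    "host": f"{service}.{namespace}.svc.cluster.local",
                    "port": {"number": port}}}],
            }],
        },
    }


if __name__ == "__main__":
    items = [istio_gateway("shop", "corp.example.com", "shop-tls"),
             virtual_service("orders", "shop", "corp.example.com", 8080)]
    # A v1 List lets a single `kubectl apply -f -` create both objects.
    print(json.dumps({"apiVersion": "v1", "kind": "List", "items": items}, indent=2))
```

Because the Gateway accepts `*.shop.corp.example.com`, onboarding another application in the same namespace only needs another VirtualService; the wildcard DNS record and the load balancer stay unchanged.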
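The GCP-side steps above (reserving the IP, building the internal TCP load balancer, opening the firewall, and adding the wildcard DNS record) can also be sketched as `gcloud` commands. The article pairs Terraform with kubectl; this Python alternative only builds and prints the commands, it does not run them. Every resource name, the region, the CIDR ranges, the health-check port (443), and the `ingress-nodepool` network tag (assumed to have been set with `--tags` when the node pool was created) are assumptions. The real instance group names can be read from the node pool's `instanceGroupUrls` field.

```python
import shlex

# Source ranges used by Google Cloud health-check probes.
HEALTH_CHECK_RANGES = "35.191.0.0/16,130.211.0.0/22"


def ilb_commands(region: str, network: str, subnet: str, ilb_ip: str,
                 ingress_groups: dict, client_cidrs: list, dns_zone: str,
                 record_name: str, tag: str = "ingress-nodepool") -> list:
    """Build (but do not run) gcloud commands for the internal TCP load balancer,
    firewall rules, and wildcard DNS record. All resource names are hypothetical."""
    cmds = [
        # Reserve a chosen, currently unallocated internal IP from the node subnet.
        ["gcloud", "compute", "addresses", "create", "ilb-ip",
         f"--region={region}", f"--subnet={subnet}", f"--addresses={ilb_ip}"],
        # TCP health check for the backend service (port is an assumption).
        ["gcloud", "compute", "health-checks", "create", "tcp", "ilb-hc", "--port=443"],
        ["gcloud", "compute", "backend-services", "create", "ilb-bs",
         "--load-balancing-scheme=internal", "--protocol=tcp",
         f"--region={region}", "--health-checks=ilb-hc"],
    ]
    # One backend per zonal instance group of the ingress node pool.
    for zone, group in ingress_groups.items():
        cmds.append(["gcloud", "compute", "backend-services", "add-backend", "ilb-bs",
                     f"--region={region}", f"--instance-group={group}",
                     f"--instance-group-zone={zone}"])
    cmds += [
        # Frontend: the reserved IP, forwarding ports 80 and 443.
        ["gcloud", "compute", "forwarding-rules", "create", "ilb-fr",
         f"--region={region}", "--load-balancing-scheme=internal",
         f"--network={network}", f"--subnet={subnet}", "--address=ilb-ip",
         "--ip-protocol=TCP", "--ports=80,443", "--backend-service=ilb-bs"],
        # Authorized clients may reach only nodes carrying the ingress tag.
        ["gcloud", "compute", "firewall-rules", "create", "allow-ilb-clients",
         f"--network={network}", "--direction=INGRESS", "--allow=tcp:80,tcp:443",
         f"--source-ranges={','.join(client_cidrs)}", f"--target-tags={tag}"],
        # Let Google health-check probes through to the same nodes.
        ["gcloud", "compute", "firewall-rules", "create", "allow-ilb-health-checks",
         f"--network={network}", "--direction=INGRESS", "--allow=tcp",
         f"--source-ranges={HEALTH_CHECK_RANGES}", f"--target-tags={tag}"],
        # Wildcard A record: *.namespace.zone points at the load balancer frontend.
        ["gcloud", "dns", "record-sets", "create", record_name,
         f"--zone={dns_zone}", "--type=A", "--ttl=300", f"--rrdatas={ilb_ip}"],
    ]
    return cmds


if __name__ == "__main__":
    commands = ilb_commands(
        region="us-central1", network="my-vpc", subnet="gke-nodes",
        ilb_ip="10.10.0.50",
        ingress_groups={"us-central1-a": "ingress-nodepool-ig-a",
                        "us-central1-b": "ingress-nodepool-ig-b",
                        "us-central1-c": "ingress-nodepool-ig-c"},
        client_cidrs=["10.20.0.0/16"], dns_zone="corp-internal",
        record_name="*.shop.corp.example.com.")
    for cmd in commands:
        print(shlex.join(cmd))  # shlex.join quotes the wildcard record name
```

Returning argument lists instead of shell strings keeps the commands easy to review or to hand to `subprocess.run` without shell-quoting surprises.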

The service should then be resolvable and routable from authorized internal networks (peered private VPCs or internal networks connected via VPN or Dedicated Interconnect) as:

`https://<service>.<namespace>.<zone>/<endpoint>`

The advantages of this design pattern are:

- The Ingress Gateway provides fully functional application load balancing services.

- Istio provides service discovery and routing using names and namespaces.

- The Ingress Gateway service and ingress gateway node pool can be scaled as required to meet demand.

- The Ingress Gateway is multi-zonal, for greater availability.
